XKCD's Reaction Maps with n-gram matching

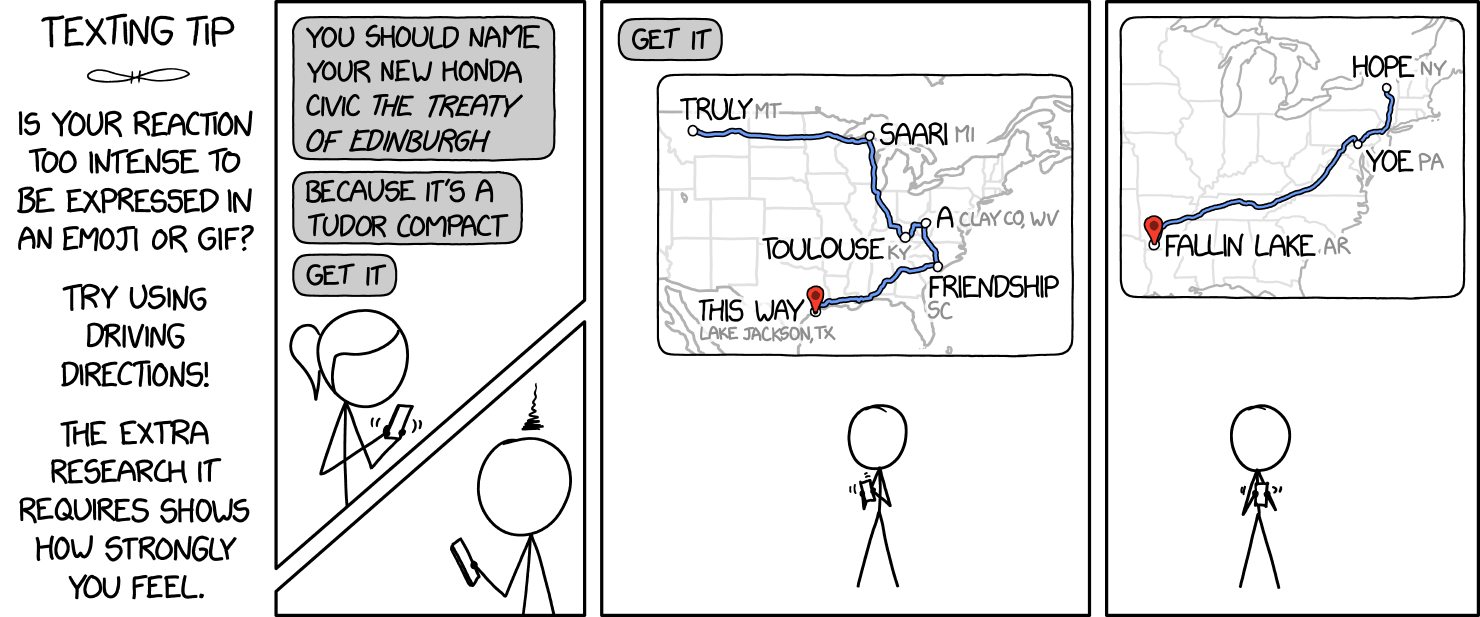

I got a good laugh out of this recent XKCD strip, partly because I love puns, and partly because it's pretty hypocritical for Cueball to express his disdain for a one with 9 more:

© Randall Monroe, 2020

Then it started to get me thinking. Getting a solid string of homophonous place names has to be pretty hard to do manually. Could a computer do it better? I should mention Randall has cautioned on the danger of thinking about problems. Or maybe the fun of making other people think about them. And sure enough, I was picked off cleanly.

The long and short is that I cancelled all my appointments for the week (all 0 of them) and made a phrase-to-map-directions converter at maps.lam.io.

I strongly suggest saying the result out loud a few times without gaps between words to see the effect, and your mind will click onto the meter needed to approach your phrase.

Depending on your standards jokes and their byproducts, you may see the where the results seem to try, but fall short of the mark a decent proportion of the time. Well, while I see this as merely a "suggester" of your next road trip to the doghouse, I really think part of the problem may legitimately be that it's hard to find homophones from places.

Ultimately, I'm satisfied with the quality-speed tradeoff of the first prototype. I think generally, place names might not make very good words. And that's maybe why we named them that way.

So all that aside, how does this work?

General approach

At its core, the problem is really this: find an efficient way of sampling the power set of place names $\mathcal{P}(L)$ that will fit in the target phrase of length $N$, i.e. $\{l \in \mathcal{P}(L) | \sum{ len(l_i) } \le N \}$. The solution space is way too big for brute force of course. With millions of place names, and phrase length to place name ratios of 5 or more, there are easily trillions of trillions of combinations.

Not to mention that there is an immediate win to this generally, just by dynamic programming: we step through the phrase and right-align the candidate term, and use the best result up to where the start of the candidate term would land. In other words, over the set of places L, the target string t of length N, and the scoring function for two substrings $s(\cdot, \cdot)$:

$$C[n] = max_{l\in L} \left\{ s(p,t[n-len(l):n]) + C[n-len(l)] \right\}$$

This reduces our effort to $\mathcal{O}(|L|N)$ which is better, but is still in the order of tens (N) of millions (|L|) of queries.

I believe this is as far as an exact solution can get. As far as I've reasoned, impossible to be greedy and exact, because this necessitates an order of processing the string (e.g. making a decision of the subset to narrow to from a set of characters) whereas an exact solution must consider the string entirely at once. In other words, you can always be cutting out the optimal solution except in very narrow cases (e.g. not enough string left for some terms to catch up). This is where we have to start approximating.

This solution: n-gram similarity

The best I could come up with so far works with phonemes. This particular version uses the last three phonemes (3-gram) of the target phrase to look up similar-sounding 3-grams. These are linked to places that terminate with exactly that 3-gram. Then with this subset I find the best match. Nice and simple.

So how does it perform? First, as you might expect at a glance, it is definitely going to be faster than an exact solution. I use all 39 phonemes from the ARPABET that are found in the CMU dict project, so there are 39^3 = 59319 3-grams. For each I pre-computed 40 of the most similar 3-grams to make the final sample size consistent and large enough.

More specifically, I started with a phoneme similarity matrix [1], then used a multi-dimensional scaling algorithm to embed this matrix 2D space, then concatenated the coordinates of each phoneme into 6D, and performed nearest neighbour on each term with a kd-tree.

At query time, this translates to a few exact index hits on tables with around 1000000 rows, for each of the approximately 10-100 phonemes in a phrase. Not shabby. The pre-computation was also pretty reasonable, taking less than an hour on my Macbook.

Well, I want better.

Okay, so we're only using a small chunk of strings for this first big narrowing step. What's the tradeoff? Looking at the histogram of number of phonemes:

This is a little concerning. We're far below the mean, so most of the string will follow the unconditioned statistics of arbitrary string matching. Here is an estimate of that distribution:

This was generated by sampling elements from the similarity matrix and adding them, then sampling that result set and repeating, creating effective n-gram samples of length 2^k for k iterations.

We see the mean is around 0.2, and of course converges for more phonemes. Yikes. For reference, P (for "pit") and F (for "fit") have weight 0.26. Of course, we don't care about the mean directly: for each phoneme of the target, we are looking for the best match out of our sample. This turns into a maximum distribution with cdf $F_n = (F_1)^n$, and with the finite support $[0, 1]$, the distribution gets tighter and moves to the right with more samples.

How many samples? Let's look at the distribution of the number of place names per n-gram:

The mean is 266 names/n-gram (Zipf law parameter: 0.722). Given our sample 40 n-grams, this gives us around 10000 place names. I'm shaky on the maximum distribution of a sample of 10000 with finite support, so for now I can only offer the observation that sampling from the unconditioned 8-gram distribution matches pretty closely to the actual typical score from running the program, hovering around 0.5 for the matches beyond the first 3 exact ones.

So how can we improve this?

- We can sample more points, although because 1 is an asymptote, the gains must be slowing down, while user impatience is superlinear in time probably... [definitely].

- We can increase the $n$ of our n-gram, but anything above $n=4$ — where the number of permutations is $39^4 = 2.3\times 10^6$ — is unreasonable for complete coverage. Complete effective coverage is perhaps more reasonable: based on the Zipf law slope, we can cut twice the n-grams ($10^{1-0.722} = 1.89$) for every word we sacrifice.

Ultimately, we have a few fundamental tradeoffs. There's obviously search speed vs. result quality. Then there's the time, memory and indexing speed from pre-computation. The goal of pre-computation here is to add more context to the decision of reducing the sample space. N-grams for example guarantee a far-above-average score for the last n phonemes. My motivation to pursue n-grams is that they are very fast and improve scores directly and verifiably. In particular, I'd like to know if there are any strong correlations between small pieces of context and large strings, even if they are complex and language-dependent. The first to come to my mind didn't have enough power to make much of a difference, e.g. phoneme ↔ word length (to condition on the memoizing array $C$).

-

1/31/2020: I am running a 4-gram at the moment, will see how it does.

-

2/06/2020: How much farther is there to the top? To find out, I brute-forced a corpus of Reddit comments for the best possible solution with the place names I've got. I also compared this to the 3-gram's performance.

Alg Avg. score/phoneme N 3-gram $0.80 \pm 0.05$ 369 Brute-force $0.88 \pm 0.03$ 74

The fact that the 3-gram is actually pretty close to the exact solution hints at the fact that most matching terms don't tend to be much longer than n-grams long, and the sample size of 10000 additional places makes up for the rest. And all things considered, the exact solutions aren't all that great either. Some brute-forced solutions of random sample Reddit comments are in the below aside:

Code

The code is hosted on GitHub. It includes the data massaging scripts, database schemas, and server/client code.

A shoutout to the projects that made this possible:

- Data from the GeoNames gazeteer for US city names during development;

- The North American OSM dataset for the current place name & coordinate data;

- The CMU Pronuncing Dictionary and CMU's d2p-seq2seq to get pronunciations in terms of phonemes;

- The work "Similarity and Frequency in Phonology" [1] for the consonant-consonant phoneme similarity measure (note: I personally sounded out and guessed the vowel phoneme similarity matrix);

- scikit-learn's MDS and a kd tree to group n-grams (tuples of phonetic units);

- The languages of the stack: PostgreSQL + Node + React, with Leaflet, OSM and OSRM.

[1] Frisch, S. (1996). Similarity and Frequency in Phonology. Unpublished doctoral dissertation, Northwestern University.

- Use it

- GitHub